How to Set Up Nvidia GPU-Enabled Deep Learning Development Environment with Python, Keras and TensorFlow

Discovering GPU-friendly Deep Neural Networks with Unified Neural Architecture Search | NVIDIA Technical Blog

GitHub - zia207/Deep-Neural-Network-with-keras-Python-Satellite-Image-Classification: Deep Neural Network with keras(TensorFlow GPU backend) Python: Satellite-Image Classification

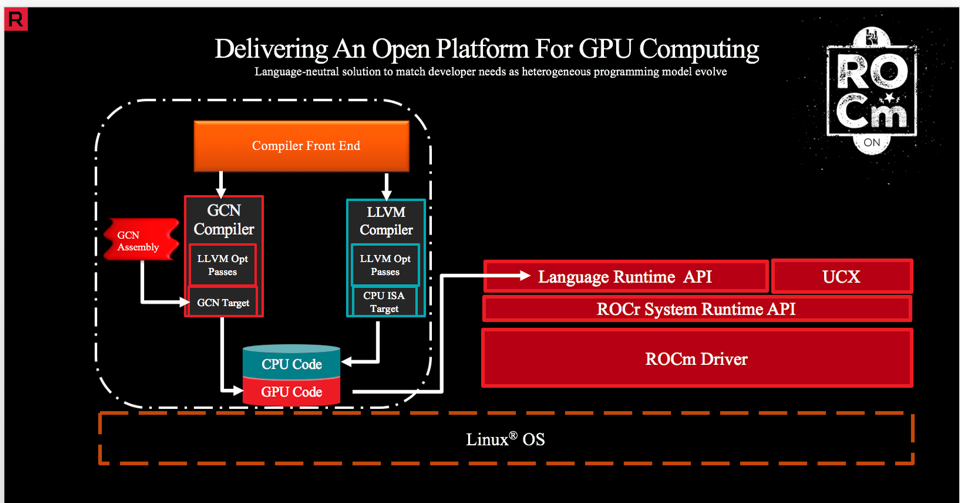

AITemplate: a Python framework which renders neural network into high performance CUDA/HIP C++ code. Specialized for FP16 TensorCore (NVIDIA GPU) and MatrixCore (AMD GPU) inference. : r/aipromptprogramming

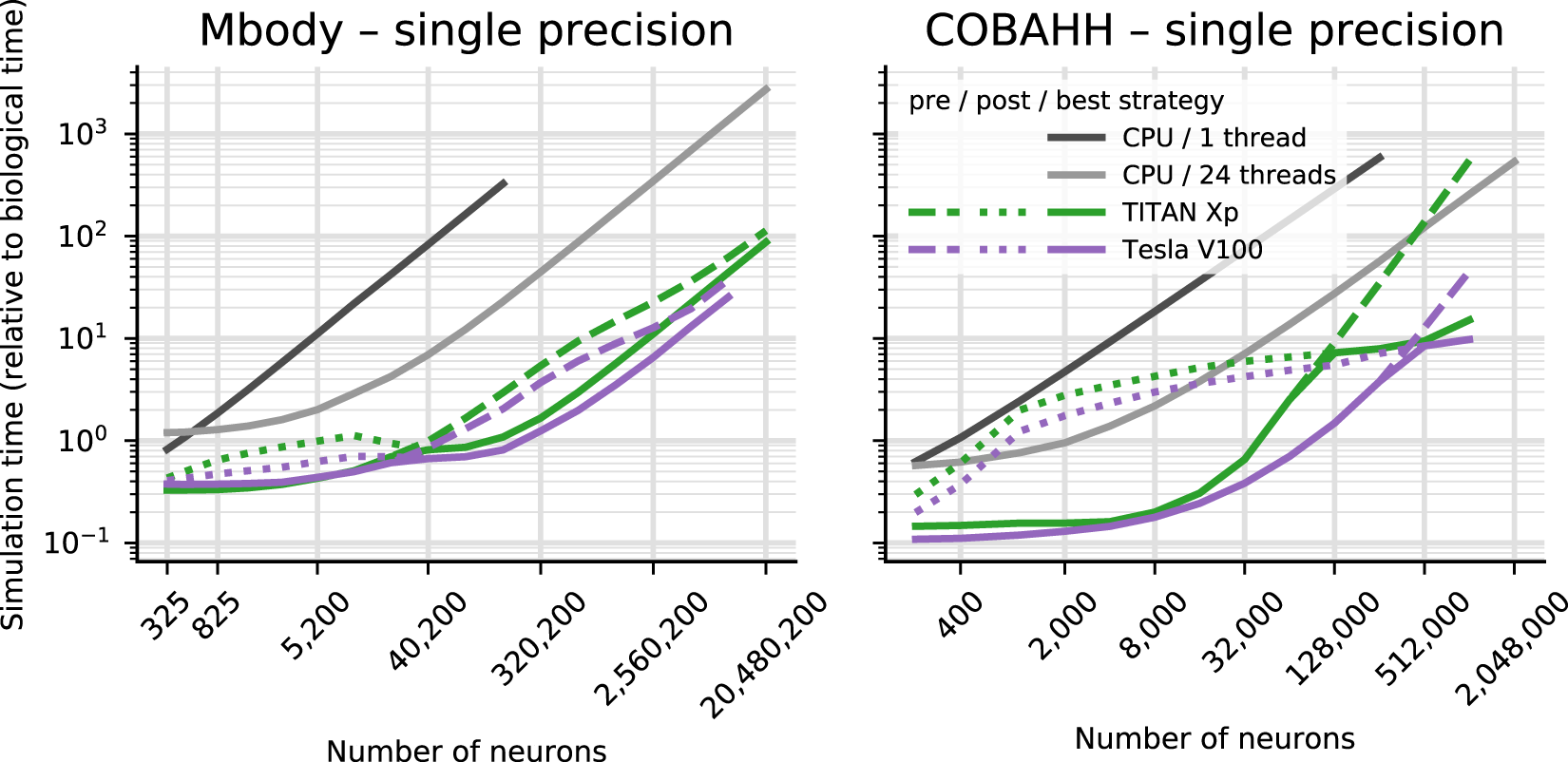

Brian2GeNN: accelerating spiking neural network simulations with graphics hardware | Scientific Reports

Why is the Python code not implementing on GPU? Tensorflow-gpu, CUDA, CUDANN installed - Stack Overflow

Parallelizing across multiple CPU/GPUs to speed up deep learning inference at the edge | AWS Machine Learning Blog

GitHub - zylo117/pytorch-gpu-macosx: Tensors and Dynamic neural networks in Python with strong GPU acceleration. Adapted to MAC OSX with Nvidia CUDA GPU supports.

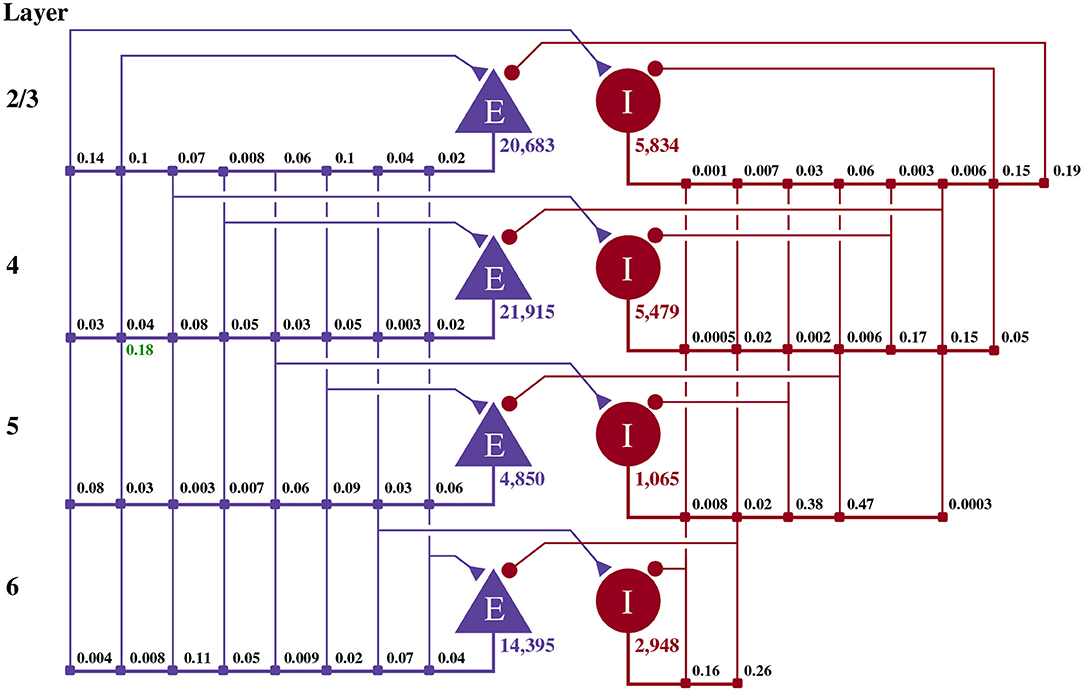

Optimizing Fraud Detection in Financial Services with Graph Neural Networks and NVIDIA GPUs | NVIDIA Technical Blog

GitHub - pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration

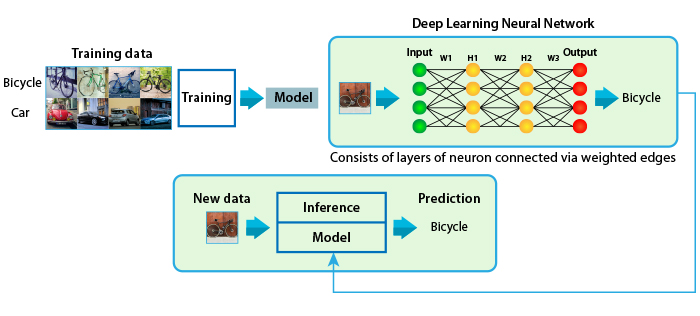

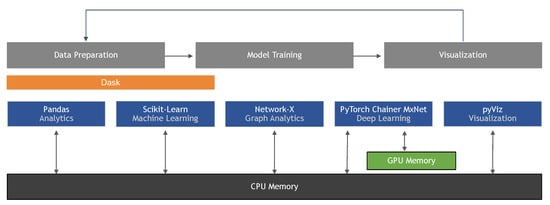

Information | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence